CIESC Journal ›› 2025, Vol. 76 ›› Issue (2): 769-786. DOI: 10.11949/0438-1157.20240835

• Process Systems Engineering •

Received: 2024-07-23

Revised: 2024-09-06

Publication date: 2025-03-25

Release date: 2025-03-10

Corresponding author: Zhenlei WANG

About the author: Wenfeng FU (b. 2000), male, master's student, ff187499@163.com

Wenfeng FU1, Zhenlei WANG1, Xin WANG2


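Abstract:

In complex industrial production processes, an accurate model for evaluating the performance grade of the operating state is important for improving product quality and production efficiency. Deep learning has made some progress in this field in recent years. In practice, however, industrial data samples are often imbalanced, and existing deep learning performance evaluation methods extract valuable feature information poorly from the limited minority samples, which lowers evaluation accuracy. To address this, a generative adversarial network based on a weighted adaptive feature fusion network of double variational autoencoders (DVAE-WAFFN-GAN) is designed to augment the minority-class samples and improve evaluation accuracy. The method combines the VAE and the GAN. First, the minority-class data are used to pre-train a convolutional variational autoencoder (CNN-VAE) and a long short-term memory variational autoencoder (LSTM-VAE), which extract the spatial and temporal feature information of the real data. When the generator network is trained, random noise is first fed into the two pre-trained decoders, which output feature vectors of the encoded real samples. A weighted adaptive feature fusion network (WAFFN), built on the attention mechanism, then assigns different weights to the feature vectors output by the two VAE decoders and fuses them. The fused feature vector replaces the random noise of the original GAN as the generator input, which improves the quality of the generated data and, in turn, the accuracy of performance evaluation. Finally, the method is tested in simulation experiments on industrial datasets with imbalanced samples.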
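The weighted fusion step described in the abstract can be illustrated with a minimal sketch. It is not the authors' WAFFN: the dot-product scoring vector `score_vec`, the softmax over two scores, and the concatenation of the two weighted vectors (chosen because the generator input width in Table 3 is 2x) are all assumptions made for illustration, and the feature vectors here are random stand-ins for the decoder outputs.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(feat_cnn, feat_lstm, score_vec):
    """Weight two equally sized feature vectors and concatenate them.

    score_vec is a hypothetical learnable vector: the attention score of a
    feature vector is its dot product with score_vec.
    """
    scores = [
        sum(a * b for a, b in zip(feat, score_vec))
        for feat in (feat_cnn, feat_lstm)
    ]
    w_cnn, w_lstm = softmax(scores)
    fused = [w_cnn * v for v in feat_cnn] + [w_lstm * v for v in feat_lstm]
    return fused, (w_cnn, w_lstm)


random.seed(0)
x = 4  # toy feature width; the real width is the number of process variables
feat_cnn = [random.random() for _ in range(x)]   # stand-in for CNN-VAE decoder output
feat_lstm = [random.random() for _ in range(x)]  # stand-in for LSTM-VAE decoder output
score_vec = [random.gauss(0.0, 1.0) for _ in range(x)]

fused, weights = fuse(feat_cnn, feat_lstm, score_vec)
print(len(fused), weights)
```

In a trainable model the scoring parameters would be learned jointly with the generator, so the two weights adapt to whichever decoder output is more useful for a given sample.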

Wenfeng FU, Zhenlei WANG, Xin WANG. An industrial process performance evaluation method based on unbalanced samples generated by DVAE-WAFFN-GAN[J]. CIESC Journal, 2025, 76(2): 769-786.

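| Network | Layer | Main parameters |
|---|---|---|
| CNN-VAE | Input layer | Number of neurons: x |
| | 1D convolution + BN + ReLU | Convolution parameters (1,16,15,1,0) |
| | 1D convolution + BN + ReLU | Convolution parameters (16,64,21,1,0) |
| | FLA + fully connected layer + Sigmoid | Number of neurons (1152,20) |
| | Fully connected layer + UNFLA | Number of neurons (20,1152) |
| | 1D transposed convolution + BN + ReLU | Convolution parameters (64,16,21,1,0) |
| | 1D transposed convolution + BN + ReLU | Convolution parameters (16,1,15,1,0) |

Table 1 CNN-VAE model parameters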
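The 1152-wide flatten layer in Table 1 can be cross-checked against the two convolution layers. The sketch below assumes that each parameter tuple reads (in_channels, out_channels, kernel_size, stride, padding), which is the usual argument order for a 1D convolution but is not stated in the table, and it searches for the input length x that reproduces 1152.

```python
def conv1d_out_len(length, kernel, stride=1, padding=0):
    """Standard output-length formula for a 1D convolution without dilation."""
    return (length + 2 * padding - kernel) // stride + 1


# Assumption: each tuple is (in_channels, out_channels, kernel, stride, padding).
ENCODER_LAYERS = [(1, 16, 15, 1, 0), (16, 64, 21, 1, 0)]


def flattened_width(input_len):
    """Width of the flattened feature map after both encoder convolutions."""
    length, channels = input_len, 1
    for _, out_ch, kernel, stride, padding in ENCODER_LAYERS:
        length = conv1d_out_len(length, kernel, stride, padding)
        channels = out_ch
    return channels * length


# 35 is the shortest input that survives both kernels (15, then 21).
matches = [x for x in range(35, 200) if flattened_width(x) == 1152]
print(matches)  # [52]
```

Under that reading, x = 52: the length shrinks from 52 to 38 to 18, and 64 channels × 18 = 1152. A width of 52 also matches the number of process variables commonly used from the TE benchmark.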


| Network | Layer | Main parameters |
|---|---|---|
| LSTM-VAE | Input layer | Number of neurons: x |
| | Fully connected layer + Sigmoid | Number of neurons (x,32) |
| | LSTM | LSTM parameters (32,20,2) |
| | LSTM | LSTM parameters (20,32,2) |
| | Fully connected layer + Sigmoid | Number of neurons (32,x) |

Table 2 LSTM-VAE model parameters


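| Network | Layer | Main parameters |
|---|---|---|
| Generator | Fully connected layer + Sigmoid | Number of neurons (2x,52) |
| | Fully connected layer + Sigmoid | Number of neurons (52,20) |
| | Fully connected layer + Sigmoid | Number of neurons (20,x) |
| Discriminator | Fully connected layer + Sigmoid | Number of neurons (x,26) |
| | Fully connected layer + Sigmoid | Number of neurons (26,12) |
| | Fully connected layer + Sigmoid | Number of neurons (12,1) |

Table 3 GAN model parameters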


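| Network | Layer | Main parameters |
|---|---|---|
| LSTM | Input layer | Number of neurons: x |
| | Fully connected layer + Sigmoid | Number of neurons (x,32) |
| | LSTM | LSTM parameters (32,20,2) |
| | LSTM | LSTM parameters (20,32,2) |
| | Fully connected layer + Sigmoid | Number of neurons (32,y) |

Table 4 LSTM model parameters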


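| Method | Description |
|---|---|
| LSTM(no sampling) | No data augmentation; the base model evaluates performance directly on the original dataset |
| VAE-LSTM | The minority class is augmented directly with a VAE, then performance is evaluated on the new dataset |
| LSTM(SMOTE) | The class with fewer samples is first augmented with SMOTE, then performance is evaluated on the new dataset |
| NGAN-LSTM | A GAN augments the class with fewer samples, then performance is evaluated on the new dataset |
| CVGAN-LSTM | A convolutional VAE is first trained on the minority-class samples; the latent variables it produces by encoding real samples replace the random noise of the original GAN to augment the data, then performance is evaluated on the new dataset |
| LVGAN-LSTM | An LSTM VAE is first trained on the minority-class samples; the latent variables it produces by encoding real samples replace the random noise of the original GAN to augment the data, then performance is evaluated on the new dataset |
| DVGAN-LSTM | A convolutional VAE and an LSTM VAE are first trained on the minority-class samples; the latent variables the two VAEs produce by encoding real samples directly replace the random noise of the original GAN, then performance is evaluated on the new dataset |

Table 5 Description of the experimental comparison methods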
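For readers unfamiliar with the SMOTE baseline listed in Table 5, the core interpolation idea can be sketched in a few lines. This is a simplified illustration, not the implementation used in the experiments: the neighbour count `k`, the seed, and the toy minority samples are all hypothetical.

```python
import random


def smote(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points, each interpolated between a minority
    sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance.
        others = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


# Toy two-dimensional minority class.
minority = [[0.0, 0.0], [1.0, 0.2], [0.8, 1.0], [0.1, 0.9]]
new_points = smote(minority, n_new=6)
print(new_points)
```

Because every synthetic point lies on a line segment between two existing minority samples, SMOTE cannot produce samples outside the region the minority class already covers, which is one motivation for the generative baselines in the same table.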


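| Fault No. | Fault description | Type |
|---|---|---|
| 1 | A/C feed ratio changes, B composition constant (stream 4) | Step |
| 2 | B composition changes, A/C feed ratio constant (stream 4) | Step |
| 3 | Temperature of feed D changes | Step |
| 4 | Reactor cooling water inlet temperature changes | Step |
| 5 | Condenser cooling water inlet temperature changes | Step |
| 6 | A feed loss (stream 1) | Step |
| 7 | C header pressure loss (stream 4) | Step |
| 8 | A, B, C feed changes (stream 4) | Random |
| 9 | D feed temperature changes (stream 2) | Random |
| 10 | C feed temperature changes (stream 2) | Random |
| 11 | Reactor cooling water inlet temperature changes | Random |
| 12 | Condenser cooling water inlet temperature | Random |
| 13 | Reaction kinetics parameters | Slow drift |
| 14 | Reactor cooling water valve | Valve sticking |
| 15 | D feed temperature changes (stream 2) | Valve sticking |
| 16 | Unknown | Unknown |
| 17 | Unknown | Unknown |
| 18 | Unknown | Unknown |
| 19 | Unknown | Unknown |
| 20 | Unknown | Unknown |
| 21 | Valve position (stream 4) | Valve stuck |

Table 6 TE process data description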


| Fault type | State grade | Grade label |
|---|---|---|
| Normal | Optimal | 1000 |
| Fault 4 | Good | 0100 |
| Fault 5 | Medium | 0010 |
| Fault 11 | Poor | 0001 |

Table 7 Operating state grade division and grade label settings
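The grade labels in Table 7 are four-bit one-hot codes. A minimal sketch of encoding and decoding them follows; the English grade names and the argmax decoding rule are assumptions about how a classifier output would be read, not details given in the source.

```python
# Grade order follows the bit positions in Table 7 (1000, 0100, 0010, 0001).
GRADES = ["optimal", "good", "medium", "poor"]


def one_hot(grade):
    """Return the one-hot label for a grade, e.g. 'good' -> [0, 1, 0, 0]."""
    return [1 if g == grade else 0 for g in GRADES]


def decode(outputs):
    """Map a four-element score vector to the grade with the largest score."""
    best = max(range(len(outputs)), key=outputs.__getitem__)
    return GRADES[best]


print(one_hot("good"))               # [0, 1, 0, 0]
print(decode([0.1, 0.2, 0.6, 0.1]))  # medium
```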


| Operating state | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| Optimal | 94.92% | 94.21% | 93.74% | 93.21% | 94.50% | 94.08% | 94.42% | 94.32% | 95.58% |
| Good | 31.67% | 53.48% | 52.85% | 40.00% | 43.67% | 52.30% | 54.58% | 57.54% | 60.83% |
| Medium | 95.25% | 95.36% | 95.25% | 95.62% | 96.25% | 94.58% | 95.33% | 95.35% | 95.41% |
| Poor | 85.40% | 84.65% | 83.93% | 83.40% | 87.05% | 82.50% | 85.83% | 86.75% | 86.17% |
| Overall accuracy | 77.06% | 81.93% | 81.44% | 78.06% | 80.37% | 80.83% | 82.54% | 83.49% | 84.50% |

Table 8 Performance evaluation accuracy for the TE process in the good operating state at an imbalance ratio of 10:1
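For most columns of Table 8, the overall accuracy agrees with the unweighted mean of the four per-class accuracies, which is what one would expect if the test classes were equally sized. The source does not state how the overall figure is computed, and a few columns (for example LSTM(no sampling) and CVGAN-LSTM) deviate slightly, so the sketch below is only a consistency check on three columns copied from the table.

```python
# (per-class accuracies in %, reported overall accuracy in %) from Table 8.
table8 = {
    "DVAE-WAFFN-GAN-LSTM": ([95.58, 60.83, 95.41, 86.17], 84.50),
    "DVGAN-LSTM": ([94.32, 57.54, 95.35, 86.75], 83.49),
    "LSTM(SMOTE)": ([93.21, 40.00, 95.62, 83.40], 78.06),
}

means = {}
for name, (per_class, reported) in table8.items():
    means[name] = sum(per_class) / len(per_class)
    print(f"{name}: mean {means[name]:.2f}%, reported {reported:.2f}%")
```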


| Operating state | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| Optimal | 89.12% | 93.27% | 92.32% | 96.25% | 94.12% | 95.12% | 93.75% | 93.42% | 93.75% |
| Good | 90.00% | 92.64% | 94.56% | 92.27% | 94.67% | 95.41% | 94.17% | 94.83% | 94.58% |
| Medium | 40.83% | 54.48% | 52.82% | 46.85% | 48.33% | 50.58% | 52.08% | 56.45% | 58.02% |
| Poor | 77.50% | 76.55% | 76.75% | 72.64% | 77.83% | 82.50% | 78.00% | 78.65% | 79.58% |
| Overall accuracy | 74.36% | 79.24% | 79.11% | 77.00% | 78.73% | 78.75% | 79.50% | 80.73% | 81.48% |

Table 9 Performance evaluation accuracy for the TE process in the medium operating state at an imbalance ratio of 10:1


| Operating state | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| Optimal | 90.16% | 92.46% | 91.72% | 92.50% | 91.26% | 92.22% | 91.65% | 90.48% | 91.75% |
| Good | 91.15% | 93.58% | 93.36% | 92.25% | 93.25% | 94.21% | 93.57% | 94.75% | 94.32% |
| Medium | 89.23% | 93.75% | 92.67% | 92.18% | 93.65% | 94.28% | 95.15% | 95.25% | 95.75% |
| Poor | 37.50% | 50.42% | 50.87% | 39.50% | 42.36% | 48.30% | 51.14% | 55.32% | 56.28% |
| Overall accuracy | 77.01% | 82.55% | 82.15% | 79.11% | 80.13% | 82.25% | 82.92% | 83.95% | 84.53% |

Table 10 Performance evaluation accuracy for the TE process in the poor operating state at an imbalance ratio of 10:1


| Imbalance ratio | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| 40:1 | 5.00% | 24.76% | 20.35% | 9.34% | 16.25% | 22.17% | 27.32% | 34.28% | 36.50% |
| 20:1 | 15.83% | 36.84% | 32.21% | 21.65% | 30.52% | 38.75% | 42.02% | 48.63% | 50.52% |
| 10:1 | 31.67% | 53.48% | 52.85% | 40.00% | 43.67% | 52.30% | 54.58% | 57.54% | 60.83% |
| 5:1 | 52.92% | 62.64% | 59.79% | 53.14% | 56.33% | 61.25% | 66.34% | 70.85% | 72.50% |
| 2:1 | 85.00% | 89.52% | 88.64% | 88.25% | 88.12% | 89.46% | 91.04% | 91.56% | 92.48% |

Table 11 Evaluation accuracy for the good performance grade of the TE process at different imbalance ratios
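One way to read Table 11 is as the gain, in percentage points, of DVAE-WAFFN-GAN-LSTM over the unaugmented LSTM baseline at each imbalance ratio. The short sketch below only redoes that subtraction on the values copied from the table; no new results are introduced.

```python
ratios = ["40:1", "20:1", "10:1", "5:1", "2:1"]
baseline = [5.00, 15.83, 31.67, 52.92, 85.00]   # LSTM(no sampling), %
proposed = [36.50, 50.52, 60.83, 72.50, 92.48]  # DVAE-WAFFN-GAN-LSTM, %

# Gain in percentage points for each imbalance ratio.
gains = {r: round(p - b, 2) for r, p, b in zip(ratios, proposed, baseline)}
for ratio, gain in gains.items():
    print(f"{ratio}: +{gain:.2f} points")
```

The gain is largest at 20:1 (34.69 points) and smallest at 2:1 (7.48 points), which is consistent with augmentation mattering most when the minority class is scarce.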


| Imbalance ratio | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| 40:1 | 17.12% | 28.74% | 32.55% | 20.25% | 26.24% | 30.63% | 35.79% | 35.85% | 38.08% |
| 20:1 | 32.51% | 37.45% | 36.14% | 32.42% | 36.05% | 39.25% | 43.66% | 44.75% | 46.52% |
| 10:1 | 40.83% | 53.48% | 52.85% | 44.85% | 48.33% | 50.58% | 52.08% | 56.45% | 58.02% |
| 5:1 | 50.00% | 62.64% | 59.79% | 52.16% | 57.27% | 63.19% | 65.72% | 68.75% | 71.25% |
| 2:1 | 82.08% | 89.52% | 88.64% | 83.25% | 85.24% | 88.50% | 90.48% | 90.45% | 91.74% |

Table 12 Evaluation accuracy for the medium performance grade of the TE process at different imbalance ratios


| Imbalance ratio | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| 40:1 | 3.75% | 20.31% | 21.54% | 5.83% | 15.35% | 21.18% | 25.22% | 30.32% | 34.56% |
| 20:1 | 12.58% | 25.45% | 36.53% | 15.34% | 23.22% | 32.23% | 39.41% | 45.68% | 48.72% |
| 10:1 | 37.50% | 46.72% | 48.65% | 39.50% | 42.36% | 48.30% | 51.14% | 54.85% | 56.28% |
| 5:1 | 42.25% | 50.25% | 51.46% | 44.93% | 47.33% | 58.52% | 62.54% | 65.24% | 68.77% |
| 2:1 | 70.08% | 72.65% | 74.84% | 71.23% | 75.89% | 77.36% | 78.31% | 80.35% | 82.45% |

Table 13 Evaluation accuracy for the poor performance grade of the TE process at different imbalance ratios


| Model | Training time/s | Sample generation time/s | Generation time per sample/ms |
|---|---|---|---|
| VAE | 246 | 0.154 | 0.770 |
| NGAN | 328 | 0.216 | 1.080 |
| CVGAN | 732 | 0.537 | 2.680 |
| LVGAN | 583 | 0.462 | 2.310 |
| DVGAN | 985 | 0.835 | 4.180 |
| DVGAN-WAFFN | 1196 | 1.175 | 5.870 |

Table 14 Time cost of generating minority-class data with different algorithms in the TE simulation experiment
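The two generation-time columns of Table 14 are linked by the number of generated samples. Dividing the total generation time by the per-sample time gives a count close to 200 for every model; that count is inferred from the table rather than stated in the source, so treat it as a reading aid only.

```python
# (total generation time in s, per-sample generation time in ms) from Table 14.
table14 = {
    "VAE": (0.154, 0.770),
    "NGAN": (0.216, 1.080),
    "CVGAN": (0.537, 2.680),
    "LVGAN": (0.462, 2.310),
    "DVGAN": (0.835, 4.180),
    "DVGAN-WAFFN": (1.175, 5.870),
}

# Implied sample count = total time (converted to ms) / time per sample (ms).
implied = {
    model: total_s * 1000.0 / per_sample_ms
    for model, (total_s, per_sample_ms) in table14.items()
}
for model, count in implied.items():
    print(f"{model}: about {count:.1f} samples")
```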


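| No. | Variable | Description | Unit |
|---|---|---|---|
| 1 | ρNAP | Cracking feedstock density | kg·m⁻³ |
| 2 | CNP | n-Paraffin concentration | % |
| 3 | CIP | Isoparaffin concentration | % |
| 4 | COLE | Olefin concentration | % |
| 5 | CNAP | Naphthene concentration | % |
| 6 | CBTX | Aromatics concentration | % |
| 7 | Ffeed | Feed flow rate | t·h⁻¹ |
| 8 | FDS | Dilution steam flow rate | t·h⁻¹ |
| 9 | FBfuel | Bottom fuel flow rate | m³·h⁻¹ |
| 10 | FSfuel | Sidewall fuel flow rate | m³·h⁻¹ |
| 11 | | Flue gas oxygen content | % |
| 12 | Tg1 | Flue gas temperature 1 | ℃ |
| 13 | Tg2 | Flue gas temperature 2 | ℃ |
| 14 | Fss | High-pressure steam flow rate | kg·h⁻¹ |
| 15 | Tss | High-pressure steam temperature | ℃ |
| 16 | COT | Cracking outlet temperature | ℃ |
| 17 | THK | Initial boiling point temperature | ℃ |
| 18 | TKK | Final boiling point temperature | ℃ |

Table 15 Ethylene cracking furnace process variables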


| Operating state | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| Optimal | 98.62% | 98.35% | 98.74% | 98.25% | 98.50% | 98.78% | 98.73% | 98.50% | 98.81% |
| Medium | 52.47% | 68.42% | 70.15% | 60.53% | 67.22% | 73.50% | 81.32% | 82.35% | 85.45% |
| Poor | 98.45% | 98.12% | 98.37% | 98.32% | 98.55% | 98.43% | 98.61% | 98.34% | 98.63% |
| Overall accuracy | 83.18% | 88.29% | 89.08% | 85.70% | 88.09% | 90.24% | 92.89% | 93.06% | 94.30% |

Table 16 Performance evaluation accuracy for the ethylene cracking furnace in the medium operating state at an imbalance ratio of 10:1


| Operating state | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| Optimal | 98.35% | 98.61% | 98.54% | 98.29% | 98.42% | 98.38% | 98.50% | 98.33% | 98.72% |
| Medium | 98.23% | 98.42% | 98.32% | 98.45% | 98.36% | 98.50% | 98.48% | 98.45% | 98.54% |
| Poor | 55.35% | 64.85% | 68.43% | 59.24% | 66.57% | 72.43% | 80.85% | 82.32% | 84.52% |
| Overall accuracy | 83.98% | 87.29% | 88.43% | 85.33% | 87.78% | 89.76% | 92.61% | 93.03% | 93.93% |

Table 17 Performance evaluation accuracy for the ethylene cracking furnace in the poor operating state at an imbalance ratio of 10:1


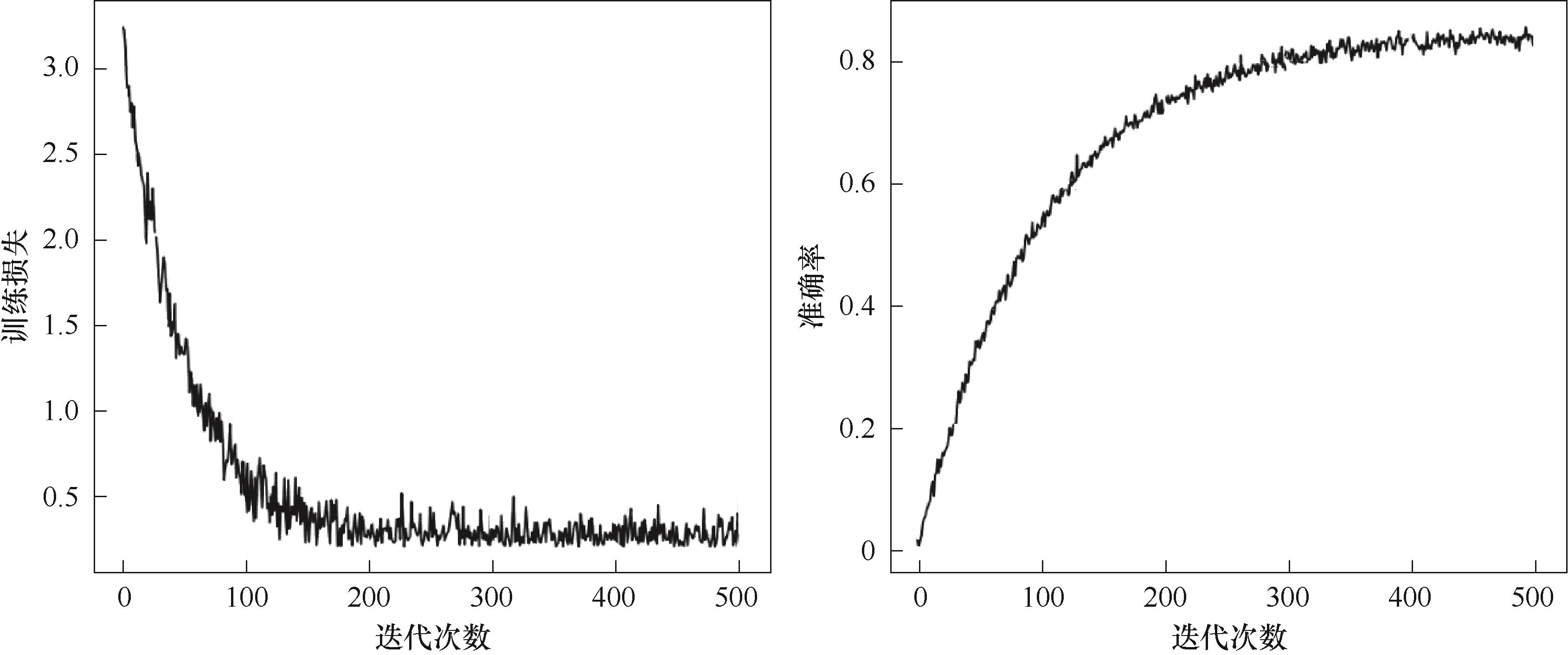

Fig.12 LSTM model training process for the ethylene cracking furnace in the medium operating state at an imbalance ratio of 10:1

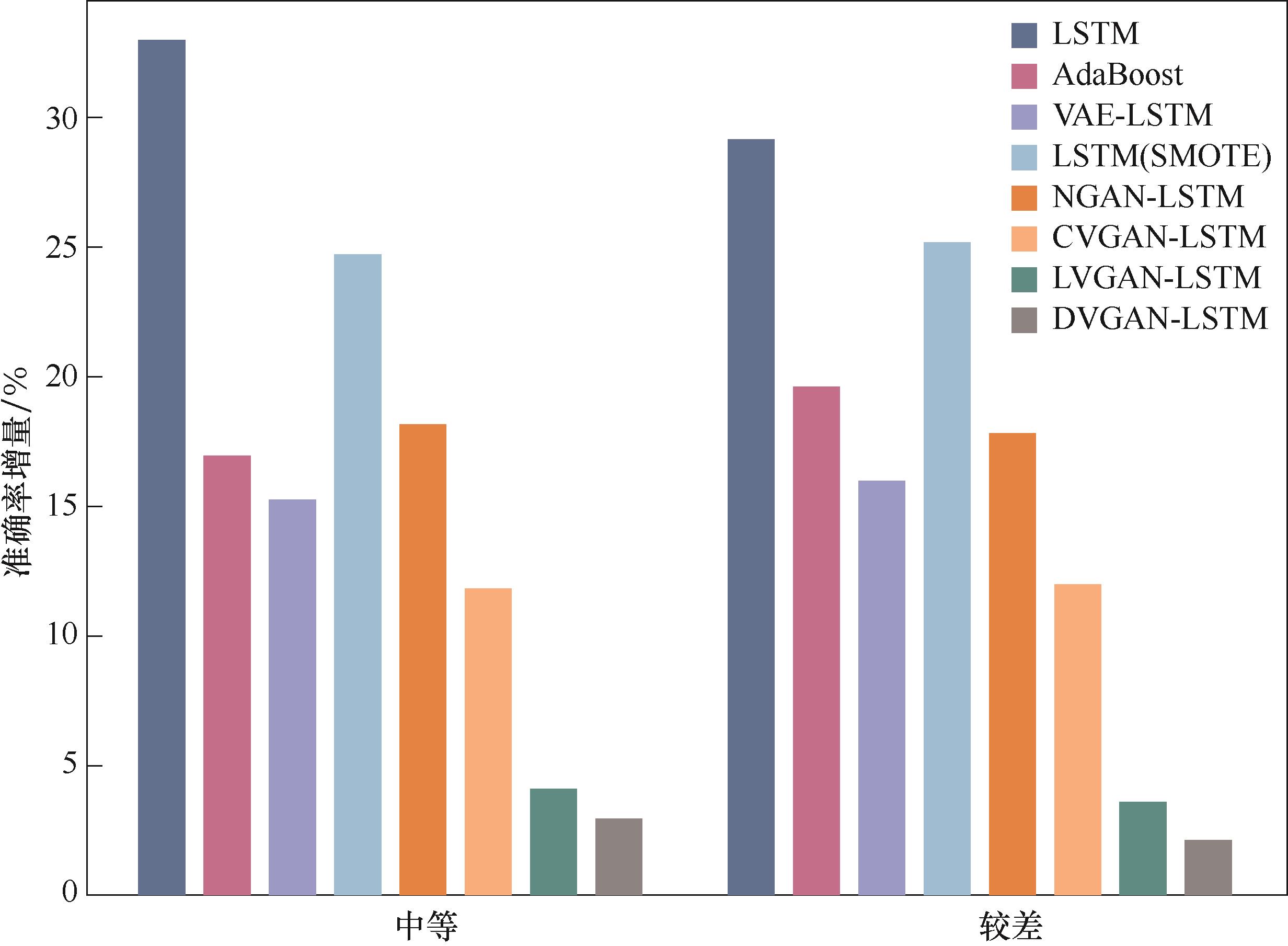

Fig.13 Increase in performance evaluation accuracy under two operating states of the ethylene cracking furnace at an imbalance ratio of 10:1

| Imbalance ratio | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| 40:1 | 13.25% | 40.45% | 43.72% | 34.45% | 40.62% | 48.22% | 55.50% | 61.25% | 64.38% |
| 20:1 | 30.42% | 52.63% | 64.25% | 47.55% | 53.64% | 60.25% | 70.48% | 72.42% | 76.15% |
| 10:1 | 52.47% | 65.53% | 70.32% | 60.53% | 67.22% | 73.50% | 81.32% | 83.63% | 85.45% |
| 5:1 | 73.23% | 77.32% | 83.50% | 76.45% | 82.89% | 84.85% | 89.25% | 90.35% | 92.40% |
| 2:1 | 93.00% | 95.72% | 97.15% | 94.21% | 95.27% | 97.60% | 98.44% | 98.28% | 98.85% |

Table 18 Evaluation accuracy for the medium performance grade of the ethylene cracking furnace at different imbalance ratios


| Imbalance ratio | LSTM(no sampling) | AdaBoost | VAE-LSTM | LSTM(SMOTE) | NGAN-LSTM | CVGAN-LSTM | LVGAN-LSTM | DVGAN-LSTM | DVAE-WAFFN-GAN-LSTM |
|---|---|---|---|---|---|---|---|---|---|
| 40:1 | 12.28% | 40.84% | 43.35% | 34.75% | 38.56% | 45.37% | 56.92% | 62.53% | 64.75% |
| 20:1 | 28.53% | 54.46% | 55.48% | 46.28% | 50.25% | 57.62% | 65.84% | 71.12% | 74.25% |
| 10:1 | 52.47% | 69.42% | 70.52% | 60.53% | 67.22% | 73.50% | 81.32% | 83.13% | 85.45% |
| 5:1 | 72.50% | 82.32% | 84.92% | 74.64% | 81.73% | 85.92% | 88.25% | 92.25% | 94.40% |
| 2:1 | 93.36% | 96.26% | 97.23% | 94.25% | 95.63% | 97.48% | 97.52% | 97.04% | 98.45% |

Table 19 Evaluation accuracy for the poor performance grade of the ethylene cracking furnace at different imbalance ratios


| Model | Training time/s | Sample generation time/s | Generation time per sample/ms |
|---|---|---|---|
| VAE | 224 | 0.132 | 0.660 |
| NGAN | 298 | 0.208 | 1.040 |
| CVGAN | 673 | 0.437 | 2.180 |
| LVGAN | 564 | 0.412 | 2.060 |
| DVGAN | 826 | 0.784 | 3.920 |
| DVGAN-WAFFN | 1032 | 1.036 | 5.180 |

Table 20 Time cost of generating minority-class data with different algorithms in the ethylene cracking furnace experiment


