CIESC Journal, 2022, Vol. 73, Issue (3): 1270-1279. DOI: 10.11949/0438-1157.20211291

Received: 2021-09-05

Revised: 2021-11-09

Online: 2022-03-15

Published: 2022-03-14

Contact: Zhenlei WANG

About the author: Shunhua LUO (born 1997), male, master's student.

Shunhua LUO1, Zhenlei WANG1, Xin WANG2

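Abstract:

In industrial processes, far more data are available for the auxiliary variables than for the primary variable, so the two are severely imbalanced. Co-training is one model-training method that exploits the latent information in unlabeled data to improve learning performance. In current co-training soft sensor modeling, however, the training characteristics of the learners overlap heavily, which degrades the prediction of the primary variable. To address this problem, a semi-supervised soft sensor model based on a two-subspace co-training algorithm, two-subspace co-training KNN (TSCO-KNN), is proposed. The model combines a two-subspace partitioning algorithm with co-training. Using the degree of correlation between each auxiliary variable and two feature subspaces, the principal component subspace (PCS) and the residual subspace (RS), it splits the data variables into two markedly different learning datasets. KNN regressors are then co-trained on these two datasets and used jointly to predict the primary variable. Finally, simulation studies on soft sensing of the ethane concentration at the top of an ethylene distillation column and of the product concentration in the TE process verify the effectiveness of the proposed algorithm.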
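The variable-splitting idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `split_variables_by_subspace`, the `threshold` parameter, and the choice to measure a variable's "correlation with a subspace" as the Pearson correlation between the variable and its projection onto that subspace are all assumptions made here for illustration.

```python
import numpy as np

def split_variables_by_subspace(X, n_components=2, threshold=np.sqrt(0.5)):
    """Split the columns of X into a PCS view and an RS view (illustrative only).

    Assumption: the correlation of a variable with a subspace is taken to be
    the Pearson correlation between the variable and its projection onto it.
    """
    # Standardize each auxiliary variable (assumes no constant columns).
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    # PCA via SVD: the rows of Vt are the loading vectors.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    P = Vt[:n_components].T        # loadings spanning the PCS
    Z_pcs = Z @ P @ P.T            # part of the data explained by the PCS
    Z_rs = Z - Z_pcs               # remainder, lying in the RS

    def column_corr(A, B):
        # Column-wise Pearson correlation; all columns are already zero-mean.
        num = (A * B).sum(axis=0)
        den = np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
        return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

    r_pcs = column_corr(Z, Z_pcs)
    r_rs = column_corr(Z, Z_rs)
    # Variables tied strongly to the PCS form one view, the rest the other.
    pcs_view = np.where(r_pcs >= threshold)[0]
    rs_view = np.where(r_pcs < threshold)[0]
    return pcs_view, rs_view, r_pcs, r_rs
```

Under this particular definition the two squared correlations of each variable sum to one, so the default threshold of `sqrt(0.5)` simply assigns each variable to the subspace it is more strongly tied to. The paper's own correlation measure and threshold may differ.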

Shunhua LUO, Zhenlei WANG, Xin WANG. Semi-supervised soft sensor modeling based on two-subspace co-training algorithm[J]. CIESC Journal, 2022, 73(3): 1270-1279.

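| Algorithm flow: two-subspace co-training model TSCO-KNN |
|---|
| Input: labeled dataset [...] |
| Procedure: use the two-subspace (TS) method to [...] the dataset [...] |
| (a) For the dataset [...] |
| (b) Apply PCA dimensionality reduction to obtain the PCS and RS |
| (c) Compute the correlation coefficients between each auxiliary variable and the PCS and RS |
| (d) Compute [...] separately |
| (e) Partition into [...] by comparison with the threshold |
| (f) Take [...] |
| While ( [...] ) { |
| For each unlabeled sample [...] |
| If ( [...] ) { take the largest [...] |
| Else { [...] |
| End |
| Obtain the final model and use it for prediction |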

Table 1 Algorithm flow of the two-subspace co-training model TSCO-KNN
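Because the mathematical symbols in Table 1 did not survive extraction, the co-training loop can only be sketched under assumptions. The sketch below follows a generic co-training regression pattern with two KNN learners. The confidence measure (drop in squared error on the labeled neighbours of a candidate), the averaging of the two learners at prediction time, and every name (`knn_predict`, `confidence_gain`, `co_train`, `view_a`, `view_b`, `max_iter`) are illustrative choices, not details confirmed by the source.

```python
import numpy as np

def knn_predict(X_train, y_train, X_query, k=3):
    """Plain KNN regression: average the targets of the k nearest points."""
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    idx = np.argsort(d, axis=1)[:, :k]
    return y_train[idx].mean(axis=1)

def confidence_gain(X_l, y_l, x_u, y_u, k=3):
    """Drop in squared error on x_u's labeled neighbours if (x_u, y_u) is added."""
    nbrs = np.argsort(np.linalg.norm(X_l - x_u, axis=1))[:k]
    X_aug = np.vstack([X_l, x_u])
    y_aug = np.append(y_l, y_u)
    before = knn_predict(X_l, y_l, X_l[nbrs], k)
    after = knn_predict(X_aug, y_aug, X_l[nbrs], k)
    return np.sum((y_l[nbrs] - before) ** 2 - (y_l[nbrs] - after) ** 2)

def co_train(X_l, y_l, X_u, view_a, view_b, k=3, max_iter=20):
    """Co-train two KNN regressors, each restricted to its own set of columns."""
    views = [np.asarray(view_a), np.asarray(view_b)]
    L_X = [X_l.copy(), X_l.copy()]   # each learner keeps its own labeled set
    L_y = [y_l.copy(), y_l.copy()]
    pool = list(range(len(X_u)))     # indices of still-unlabeled samples
    for _ in range(max_iter):
        changed = False
        for j in (0, 1):
            if not pool:
                break
            v = views[j]
            best_gain, best_i, best_y = 0.0, None, None
            for i in pool:
                y_hat = knn_predict(L_X[j][:, v], L_y[j], X_u[i:i + 1, v], k)[0]
                g = confidence_gain(L_X[j][:, v], L_y[j], X_u[i, v], y_hat, k)
                if g > best_gain:    # keep the most confident pseudo-label
                    best_gain, best_i, best_y = g, i, y_hat
            if best_i is not None:
                other = 1 - j        # hand the pseudo-labeled sample to the peer
                L_X[other] = np.vstack([L_X[other], X_u[best_i]])
                L_y[other] = np.append(L_y[other], best_y)
                pool.remove(best_i)
                changed = True
        if not changed:              # neither learner found a helpful sample
            break

    def predict(X_new):
        preds = [knn_predict(L_X[j][:, views[j]], L_y[j], X_new[:, views[j]], k)
                 for j in (0, 1)]
        return np.mean(preds, axis=0)  # assumed fusion rule: simple average
    return predict
```

In TSCO-KNN the two views would be the PCS-related and RS-related variable sets produced by the two-subspace split, so `view_a` and `view_b` here stand in for those column indices.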

Fig.2 Comparison of the co-training KNN(1), co-training KNN(2), and TSCO-KNN models at a labeled-data ratio of 50%

| Component | Model | RMSE | MSE | MAE |
|---|---|---|---|---|
| D | co-training KNN(1) | 1.0488 | 1.1000 | 0.8400 |
| D | co-training KNN(2) | 1.0003 | 1.0006 | 0.8107 |
| D | TSCO-KNN | 0.6474 | 0.4191 | 0.5264 |
| E | co-training KNN(1) | 1.0566 | 1.1164 | 0.8517 |
| E | co-training KNN(2) | 1.0200 | 1.0404 | 0.8289 |
| E | TSCO-KNN | 0.6829 | 0.4664 | 0.5469 |
| F | co-training KNN(1) | 1.1072 | 1.2259 | 0.8725 |
| F | co-training KNN(2) | 1.0424 | 1.0866 | 0.8300 |
| F | TSCO-KNN | 0.6695 | 0.4482 | 0.5228 |

Table 2 Performance evaluation of the three models at a labeled-data ratio of 50% (TE process)
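The three error measures reported in Table 2 follow their standard definitions, and the tabulated values can be cross-checked against each other. The short sketch below computes the metrics and confirms that, for component D, each reported RMSE squared matches the reported MSE to rounding precision. The helper names `regression_metrics` and `relative_reduction` are illustrative and not from the paper.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return RMSE, MSE and MAE for a pair of target/prediction arrays."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    mse = float(np.mean(err ** 2))
    return {"RMSE": float(np.sqrt(mse)), "MSE": mse,
            "MAE": float(np.mean(np.abs(err)))}

def relative_reduction(baseline, proposed):
    """Fractional error reduction of `proposed` relative to `baseline`."""
    return (baseline - proposed) / baseline

# (RMSE, MSE) pairs copied from Table 2, component D.
table2_d = {
    "co-training KNN(1)": (1.0488, 1.1000),
    "co-training KNN(2)": (1.0003, 1.0006),
    "TSCO-KNN": (0.6474, 0.4191),
}

# Consistency check: RMSE squared should equal MSE up to rounding.
for name, (rmse, mse) in table2_d.items():
    assert abs(rmse ** 2 - mse) < 5e-4, name

# RMSE reduction of TSCO-KNN relative to the better baseline, component D.
gain_d = relative_reduction(table2_d["co-training KNN(2)"][0],
                            table2_d["TSCO-KNN"][0])
```

For component D this gives an RMSE reduction of roughly 35% relative to co-training KNN(2), the stronger of the two baselines.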

| Model | RMSE | MSE | MAE |
|---|---|---|---|
| co-training KNN(1) | 0.1832 | 0.0336 | 0.1333 |
| co-training KNN(2) | 0.1374 | 0.0189 | 0.1013 |
| TSCO-KNN | 0.1108 | 0.0123 | 0.0858 |

Table 3 Performance evaluation of the three models at a labeled-data ratio of 10%

| Model | RMSE | MSE | MAE |
|---|---|---|---|
| co-training KNN(1) | 0.1584 | 0.0251 | 0.1134 |
| co-training KNN(2) | 0.1213 | 0.0147 | 0.0901 |
| TSCO-KNN | 0.0857 | 0.0074 | 0.0667 |

Table 4 Performance evaluation of the three models at a labeled-data ratio of 20%

| Model | RMSE | MSE | MAE |
|---|---|---|---|
| co-training KNN(1) | 0.1369 | 0.0187 | 0.0987 |
| co-training KNN(2) | 0.1010 | 0.0102 | 0.0740 |
| TSCO-KNN | 0.0647 | 0.0042 | 0.0499 |

Table 5 Performance evaluation of the three models at a labeled-data ratio of 50%

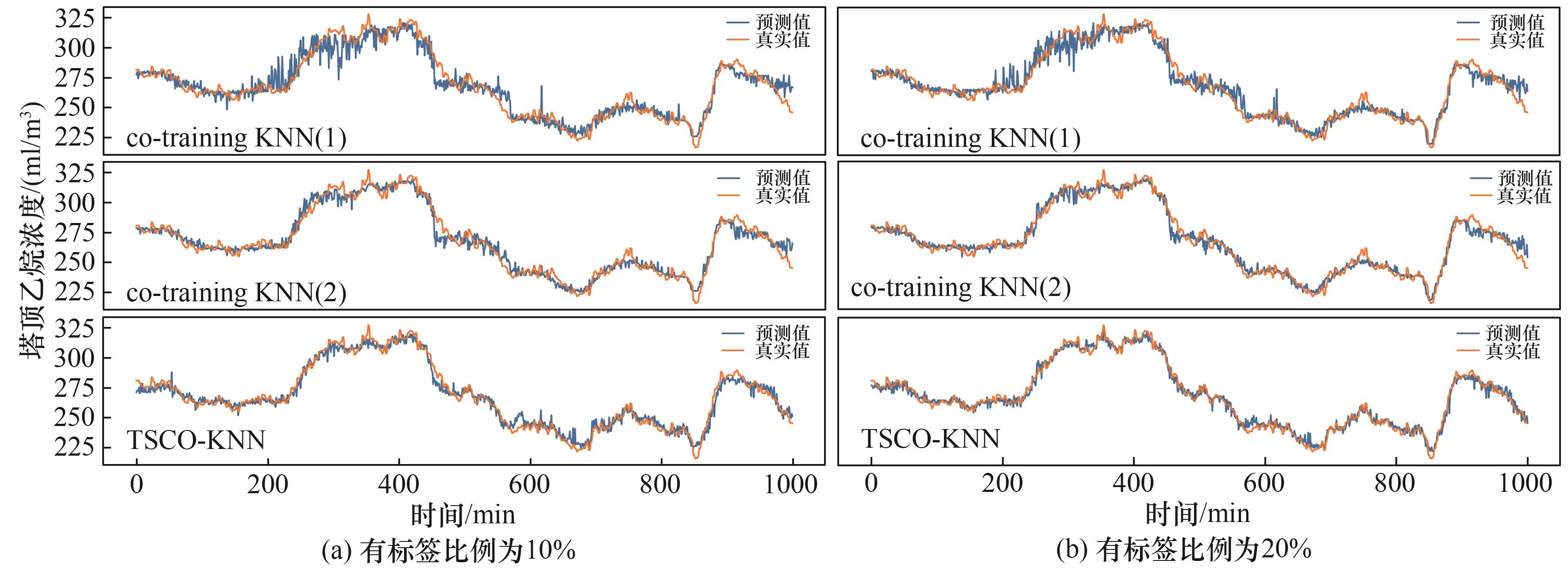

Fig.5 Comparison of the co-training KNN(1), co-training KNN(2), and TSCO-KNN models at labeled-data ratios of 10% and 20%

Fig.6 Comparison of the co-training KNN(1), co-training KNN(2), and TSCO-KNN models, and of their prediction differences, at a labeled-data ratio of 50%
